CONTRIBUTED BY

Karolina, SolveStack.ai Team

DATE

Dec 23, 2025

When growing SolveStack.ai and its database of innovative AI applications, we often encounter use cases that extend beyond immediate business value. We meet founders who are applying this technology to build a more sustainable and safer future. By highlighting these impact-driven and public-interest applications, we hope to challenge the growing pessimism around AI’s social implications and show how the technology can fuel work for the common good, beyond commercial goals.

In today’s post on an interesting AI-for-good application, we ask:

Can AI go as far as helping to prevent violence? How? And where are its limitations?

We discussed these questions with Anna Juusela, CEO and Founder of We Encourage, the company behind AinoAid™. We Encourage is an impact company that uses technology to provide guidance and mental-health support for people affected by violence.

Anna’s experience in building companies, together with her passion for preventing violence and empowering women, led her to establish We Encourage. Together with Ellimari Kortman, Ulla Koivukoski, and Katja Kytölä, she forms a team with experience at the intersection of technology, social services, research, crisis work, entrepreneurship, and therapeutic practice.

Each of us believes deeply in the same goal: to make safety, support, and reliable information accessible to anyone affected by violence—anytime they need it.

What is AinoAid™ and how does it work?

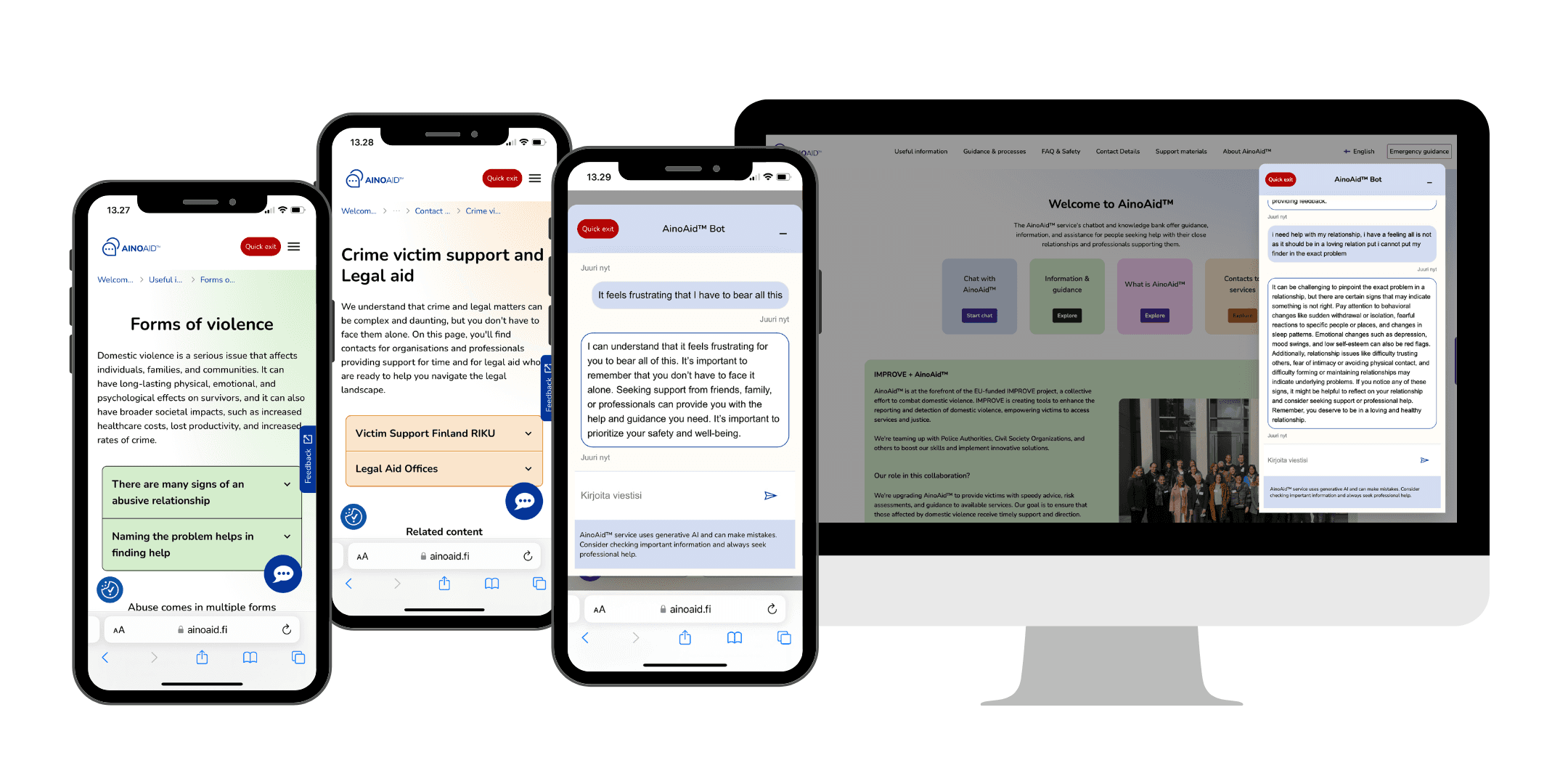

AinoAid™ is a browser-based, two-part service that supports people experiencing violence and the professionals who help them. It currently focuses on domestic violence, with plans to expand in 2026 to cover human trafficking related to sexual exploitation and, more broadly, violence in general.

AinoAid™ includes:

An AI chatbot offering psycho-social support, helping users recognize violence, assess risk, understand their rights, find support services, and learn safety measures.

An information bank providing accessible, reliable content on domestic violence, including recognition, risk assessment, safety planning, legal processes, and support services, accessible with or without the chat.

For survivors, AinoAid™ offers support, guidance, survivor stories, and clear, contextual pathways to help and safety. For professionals, it provides educational resources and practical tools for identifying victims, assessing risk, and offering sensitive, effective support.

The service works on any device directly through a web browser at ainoaid.fi, with no downloads required.

How is AI driving this project?

AI is used primarily to power the AinoAid™ chatbot, enabling supportive, natural-feeling conversations. The model has been co-created and trained with domestic-violence survivors, therapists, and psychologists to mirror trauma-informed communication and therapeutic interaction patterns. Its training and fine-tuning were supported by the EU-funded IMPROVE Consortium.

AI helps the chatbot understand the user’s input and provide personalized suggestions, recommendations, and guidance. It can direct users to the most relevant information or services. Lastly, it helps users verbalize complex situations and make sense of their experiences. This is particularly important for people experiencing violence, who may struggle to find the words to describe what happened to them.

Such functionality raises many ethical questions. Fear already prevents many people from reporting violent experiences. Add scepticism about new technology, and one has to ask: will it work?

Anna replies:

AinoAid™ is intentionally designed with strict safety and ethical principles. The AI’s limitations and potential for error are openly acknowledged. It does not replace human professionals; instead, it guides users toward appropriate resources. As usage grows, the system will continue to improve through expert input and evolving pointers to verified sources. We recognize that seeking help for violence, such as domestic violence, is already emotionally difficult, and introducing technology into such moments can create additional fear or hesitation. Our approach is to make AinoAid™ feel as safe, transparent, and human-guided as possible.

In practice, AinoAid™ addresses these concerns through the following methods:

Radical transparency by design

The chatbot clearly introduces itself as an AI assistant, explains how it works, and sets clear boundaries around what it can and cannot do. It also provides guidance on safe use, helping users feel informed and in control from the very first interaction.

Survivor-led development

The service is built on real needs identified through survivor interviews conducted during the EU-funded IMPROVE project. These insights shaped both the content and interaction design, so the service reflects lived experiences rather than abstract assumptions.

Strong privacy and secure AI infrastructure

Users are instructed not to share personal details, and any such information is automatically removed using Microsoft’s PII-detection tools. No identifying data is stored, and cookies can be easily cleared. The system is monitored under the Microsoft Responsible AI framework, includes mechanisms to interrupt unsafe conversations, and has been cybersecurity tested to Class A standards.

Ethical oversight and human review

Conversations are periodically reviewed by trained human professionals, so that they can remain accurate, supportive, and free from harmful bias.

Positioned as support, not replacement

The founders are transparent about the fact that AinoAid™ does not replace human professionals. It acts as an additional, complementary resource for survivors, professionals, and others involved, helping guide users toward appropriate human support. The team actively engages with professionals in the field to address concerns, build trust, and continuously refine how the service supports real-world practice.
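For technically minded readers, the privacy step mentioned above (removing personal details from a message before anything is stored) can be illustrated with a minimal sketch. This is not AinoAid™'s implementation and does not use Microsoft's PII-detection tools; the regular expressions, the `PII_PATTERNS` table, and the `redact_pii` function are hypothetical stand-ins chosen only to show the general idea of replacing identifying text with placeholders.

```python
import re

# Hypothetical patterns for illustration only. A production service would rely
# on a dedicated PII-detection tool, which covers far more than these two cases.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{6,}\d"),
}


def redact_pii(message: str) -> str:
    """Replace each matched span with a placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        message = pattern.sub(f"[{label}]", message)
    return message


if __name__ == "__main__":
    print(redact_pii("You can reach me at name@example.com or +358 40 123 4567."))
```

In this sketch, redaction would run before a message is logged or reviewed, so that only the placeholder text is retained. Hand-written patterns like these miss names, addresses, and many other identifiers, which is one reason a real service would use a purpose-built detection tool instead.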

What can AI assist with, and what can’t or shouldn’t it help with? How is this division addressed at AinoAid™?

Anna shares: AinoAid™ is first aid; it is not intended for long-term psychotherapeutic support.

AinoAid™ can help and support a person who is exploring their situation and wondering, for example, whether their experience in the relationship is normal or whether it could be domestic violence. They can discuss their own experiences, feelings and thoughts with the chatbot or read the information they need from the knowledge base. The user can think through their own options with the chatbot and get information about what to consider, for example, when separating from a violent partner, or what kind of help is available from child protection services. With the help of AinoAid™, you can make a safety plan for yourself and a plan for how to break away from a violent relationship.

But…

It is not suitable for a situation where there is an acute threat of violence. No information is transmitted to the authorities through AinoAid™, so in an emergency you must call the emergency number. With AinoAid™ you can begin to understand the risks of your own situation and what might predict violence. In a dangerous situation, it is good to remember the instructions you have received, escape as soon as possible and seek help. AinoAid™ never replaces a human being. AinoAid™ reminds you of this and gently directs you to turn to a professional.

What is next for AinoAid™?

The service is currently available in Finland, Réunion (France), Germany, Austria and Spain. In 2026 it will also be available in mainland France and Italy. Availability here means that the system works accurately in each region's context, taking into account its specific legal and support infrastructure.

Next steps entail growth and outreach to more individuals at risk.

For us, responsible scaling means expanding AinoAid™ in a way that protects vulnerable users, maintains high ethical standards, and ensures the service remains safe, accurate, and trustworthy as it reaches more people.

If you want to learn more, try asking AinoAid™ yourself.

If you need support, don’t hesitate to reach out.

This conversation reflects our broader mission at SolveStack.ai, which goes beyond our business objectives. We also want to surface impact-driven, public-interest AI applications that counter fear-based narratives and demonstrate how thoughtful, ethically designed technology can contribute to the common good when guided by human expertise and real-world needs.